On October 20, 2025, AWS experienced one of the largest cloud infrastructure failures in its history.

A DNS race condition in DynamoDB within the US-EAST-1 (Northern Virginia) region cascaded through AWS’s global control plane, triggering a 14-hour disruption that rippled across the internet.

Thousands of sites and apps were impacted, spanning financial platforms, airlines, government systems, and major consumer services.

Even organisations with mature disaster recovery and multi-region architectures experienced service degradation or complete failure. This wasn’t just a technical glitch – it was an architectural exposure.

Half the internet on pause

The scale of disruption was unprecedented.

Snapchat users couldn’t send messages. Roblox gamers were locked out of their worlds for hours.

Financial platforms including Coinbase, Robinhood, and Venmo froze transactions, while UK government services like HMRC and major banks including Lloyds, Halifax, Barclays, and Bank of Scotland went dark. Airlines (Delta, United), ridesharing (Lyft), and even Amazon’s own Alexa, Ring cameras, and retail systems faltered.

This wasn’t a simple application-level failure. A single infrastructure fault rippled outward, simultaneously degrading hundreds of independent services. For nearly 14 hours, what failed wasn’t just AWS – it was the assumption of seamless digital continuity.

How a DNS race condition became a global failure

The incident began at 3:11 AM ET when a DNS race condition in DynamoDB’s DNS management system caused the dynamodb.us-east-1.amazonaws.com endpoint to return an empty DNS record.

Here’s what happened: DynamoDB uses a DNS Planner service that monitors load balancer health and creates DNS plans, and DNS Enactor services (one per availability zone) that update Route 53. Race conditions between these enactors are expected and normally handled through eventual consistency.

But on October 20, three events converged catastrophically:

- DNS Enactor #1 experienced high delays in updating DNS records

- The DNS Planner accelerated its rate of producing new DNS plans

- DNS Enactor #2 rapidly processed plans and deleted old ones from the DNS Planner, assuming they were no longer in use

When DNS Enactor #2 detected that the slow DNS Enactor #1 was still using an old plan, it performed cleanup by deleting all IP addresses for DynamoDB’s regional endpoints in Route 53.

DynamoDB effectively disappeared from DNS for approximately 3 hours.
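The sequence above can be reproduced in a deliberately tiny model. The sketch below is not AWS's implementation: `ToyDns`, `apply_plan`, `cleanup_older_than`, the plan numbers, and the IP addresses are all made up for illustration, and the `check_newer` guard is a hypothetical safeguard rather than the fix AWS applied. It only shows how a late write of an old plan followed by a cleanup pass can leave an endpoint with no records.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToyDns:
    """Toy stand-in for the regional endpoint's DNS state (not Route 53's API)."""
    active_plan: Optional[int] = None
    plans: dict[int, list[str]] = field(default_factory=dict)

    def resolve(self) -> list[str]:
        # The endpoint resolves to whatever IPs the active plan still holds.
        return self.plans.get(self.active_plan, [])


def apply_plan(dns: ToyDns, plan_id: int, ips: list[str], check_newer: bool) -> bool:
    """Point the endpoint at plan_id. With check_newer, refuse stale plans."""
    if check_newer and dns.active_plan is not None and plan_id < dns.active_plan:
        return False
    dns.plans[plan_id] = ips
    dns.active_plan = plan_id
    return True


def cleanup_older_than(dns: ToyDns, newest_applied: int) -> None:
    """Delete every plan older than the one this enactor just applied."""
    for plan_id in [p for p in dns.plans if p < newest_applied]:
        del dns.plans[plan_id]


def run(check_newer: bool) -> list[str]:
    dns = ToyDns()
    # Fast enactor applies the newest plan (2).
    apply_plan(dns, 2, ["10.0.0.2"], check_newer)
    # Slow enactor's write of the older plan (1) lands late and overwrites it.
    apply_plan(dns, 1, ["10.0.0.1"], check_newer)
    # Fast enactor now cleans up plans older than the one it applied.
    cleanup_older_than(dns, 2)
    return dns.resolve()


print("without guard:", run(check_newer=False))
print("with guard:   ", run(check_newer=True))
```

Without the guard, the cleanup removes the very plan the endpoint is pointing at, so `resolve()` returns an empty list. With the guard, the stale write is rejected and the endpoint keeps its addresses.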

The cascade didn’t stop with DynamoDB

While engineers manually restored DynamoDB after 3 hours, the problems were far from over.

EC2’s DropletWorkflow Manager (DWFM) stores server lease status checks in DynamoDB. During the DynamoDB outage, leases timed out and DWFM marked most EC2 servers as unavailable.

When DynamoDB returned, DWFM entered a congestive collapse: with so many droplets to process, efforts to establish new leases took longer than the lease timeout period, creating an infinite loop.

This took 3 additional hours to mitigate.
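To see why a backlog like this can sustain itself, consider a rough toy model. Everything here is an assumption made for illustration (the `simulate` function, round-robin processing, and the numbers); it is not how DWFM works internally. The point is only that if one full pass over the fleet takes longer than a lease lasts, the earliest leases lapse before the pass completes.

```python
from typing import Optional


def simulate(num_droplets: int, leases_per_tick: int, timeout: int,
             max_ticks: int) -> Optional[int]:
    """Return the first tick at which every droplet holds a valid lease.

    Returns None if that never happens within max_ticks.
    """
    expiry = [0] * num_droplets  # tick at which each droplet's lease lapses
    cursor = 0
    for tick in range(1, max_ticks + 1):
        # Establish as many leases as the manager can handle this tick.
        for _ in range(leases_per_tick):
            expiry[cursor] = tick + timeout
            cursor = (cursor + 1) % num_droplets
        # Healthy only if no lease has lapsed (or was never established).
        if all(e > tick for e in expiry):
            return tick
    return None


# A full pass takes 10 ticks but leases last 5: the fleet never recovers.
print(simulate(num_droplets=100, leases_per_tick=10, timeout=5, max_ticks=1000))
# Throttling the work to 40 droplets makes a pass fit inside the timeout.
print(simulate(num_droplets=40, leases_per_tick=10, timeout=5, max_ticks=1000))
```

In this model, recovery depends entirely on the ratio between pass duration and lease timeout, which is why reducing the amount of in-flight work can break the loop.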

Even after EC2 instance allocation recovered, Network Manager faced a massive backlog of network state changes. New EC2 instances could launch but lacked network connectivity due to propagation delays.

This took another 5 hours to resolve.

Final clean-up, which involved removing request throttles, added another 3 hours.

The total: 14 hours from start to full recovery.

Why US-EAST-1 matters so much

US-EAST-1 isn’t just another region – it’s where AWS anchored the control plane for critical global services:

- IAM (Identity and Access Management) – account creation, policy updates, and identity management run exclusively in US-EAST-1

- AWS Security Token Service – programmatic access to any AWS service, with no regional failover

- Route 53 Private DNS – fully dependent on US-EAST-1

- Route 53 Public DNS, CloudFront, ACM for CloudFront – control planes located in US-EAST-1, though data replication exists elsewhere

These dependencies exist largely for legacy reasons: US-EAST-1 is AWS’s oldest and largest region, and adding true multi-region support for each service requires significant engineering investment. The region also offers the lowest pricing and highest capacity, driving demand in a self-reinforcing loop.

Control plane vs. data plane: why multi-region failed

The data plane – which serves live traffic and interacts with already-provisioned resources – held up for many workloads. Running EC2 instances stayed online, existing S3 data remained accessible via GET operations, and previously created endpoints continued to function.

But the control plane – responsible for creating, modifying, and authenticating infrastructure – was effectively offline.

Many organisations discovered that their disaster recovery plans depended on spinning up new infrastructure in secondary regions, which required control plane activity. Without it, failover automation silently failed.

This is why multi-region deployments alone were insufficient. It wasn’t a lack of redundancy – it was a lack of independence from US-EAST-1’s control plane.
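One way to surface this kind of hidden dependency is to list each step of a DR runbook alongside the API action it invokes, then flag the ones that need the control plane. The sketch below is a minimal illustration: the `RunbookStep` type, the sample runbook, and the hand-maintained `CONTROL_PLANE_ACTIONS` set are assumptions, and a real audit would need a much more complete classification.

```python
from dataclasses import dataclass

# Assumption: a small hand-maintained set of actions that provision or mutate
# infrastructure. A real audit would need a far more complete list.
CONTROL_PLANE_ACTIONS = {
    "ec2:RunInstances",
    "iam:CreateRole",
    "route53:ChangeResourceRecordSets",
}


@dataclass(frozen=True)
class RunbookStep:
    description: str
    action: str  # IAM-style action name the step would invoke


def control_plane_steps(runbook: list[RunbookStep]) -> list[RunbookStep]:
    """Return the steps that cannot run while the control plane is down."""
    return [step for step in runbook if step.action in CONTROL_PLANE_ACTIONS]


# Hypothetical runbook used only to exercise the check.
runbook = [
    RunbookStep("Launch standby fleet in secondary region", "ec2:RunInstances"),
    RunbookStep("Read latest config snapshot", "s3:GetObject"),
    RunbookStep("Repoint DNS at secondary region", "route53:ChangeResourceRecordSets"),
]

blocked = control_plane_steps(runbook)
for step in blocked:
    print(f"BLOCKED without control plane: {step.description} ({step.action})")
```

In this made-up runbook, two of the three steps would stall during a control plane outage, even though the secondary region itself is healthy.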

The illusion of high availability

Many teams assume they have high availability simply because they run in multiple regions or availability zones. But redundancy ≠ resilience.

HA is not a static setting – it’s a capability that only proves itself under failure conditions.

Typical weak points include:

- DNS or routing dependencies tied to a single control point

- Automation that implicitly relies on US-EAST-1 services

- Slow or manual failover steps during cascading outages

- Monitoring tools hosted in the same failing region
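The first and last points can be checked with a very small probe run from outside the affected provider. The following is a sketch under assumptions: `system_resolver` and `endpoint_resolves` are illustrative names, and the resolver is injectable so the check can be exercised without network access. It simply tests whether a hostname still resolves to at least one address, which is the symptom this outage produced.

```python
import socket
from typing import Callable, List

Resolver = Callable[[str], List[str]]


def system_resolver(hostname: str) -> List[str]:
    """Resolve hostname via the OS resolver; return [] if resolution fails."""
    try:
        infos = socket.getaddrinfo(hostname, 443, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def endpoint_resolves(hostname: str, resolver: Resolver = system_resolver) -> bool:
    """True if the hostname currently resolves to at least one address."""
    return len(resolver(hostname)) > 0


if __name__ == "__main__":
    host = "dynamodb.us-east-1.amazonaws.com"
    status = "OK" if endpoint_resolves(host) else "EMPTY DNS ANSWER"
    print(f"{host}: {status}")
```

A probe like this is only useful if it runs somewhere that does not share the failing region's dependencies, and if its alerts are delivered through a channel that does not share them either.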

During this event, companies like Vercel demonstrated that regional failover preparation works. Despite being hit by the outage, Vercel restored service in 2.5 hours by failing over to other regions – far faster than AWS’s 14-hour recovery.

However, even Vercel encountered cascading failures during what should have been routine failover, highlighting the complexity involved.

What real resilience looks like

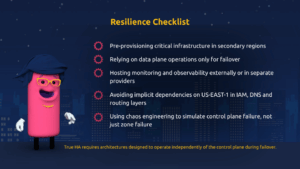

The outage made clear that multi-region is not enough. True HA requires architectures designed to operate independently of the control plane during failover.

Key design principles include:

- Pre-provisioning critical infrastructure in secondary regions

- Relying on data plane operations only for failover

- Hosting monitoring and observability externally or with separate providers

- Avoiding implicit dependencies on US-EAST-1 in IAM, DNS, and routing layers

- Using chaos engineering to simulate control plane failure, not just zone failure

- Practicing regional failover drills regularly rather than assuming they will work
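A drill along these lines can start as a unit test long before it becomes a full game day. The sketch below uses a fake control plane that refuses every request, then compares a failover path that provisions on demand with one that only selects from standbys that already exist. All names (`FakeControlPlane`, `naive_failover`, `preprovisioned_failover`), regions, and URLs are hypothetical; this is an illustration of the principle, not a production failover mechanism.

```python
class ControlPlaneDown(Exception):
    """Raised by the fake control plane to simulate a regional outage."""


class FakeControlPlane:
    def __init__(self, available: bool) -> None:
        self.available = available

    def provision(self, region: str) -> str:
        if not self.available:
            raise ControlPlaneDown(f"cannot provision in {region}")
        return f"https://app.{region}.example.com"


def naive_failover(control_plane: FakeControlPlane, region: str) -> str:
    # Depends on creating infrastructure at failover time.
    return control_plane.provision(region)


def preprovisioned_failover(standbys: dict[str, str], healthy: set[str]) -> str:
    # Uses only endpoints that already exist and currently report healthy.
    for region, url in standbys.items():
        if region in healthy:
            return url
    raise RuntimeError("no healthy pre-provisioned standby")


def drill() -> dict[str, str]:
    """Run both failover paths with the control plane switched off."""
    control_plane = FakeControlPlane(available=False)
    results: dict[str, str] = {}
    try:
        results["naive"] = naive_failover(control_plane, "eu-west-1")
    except ControlPlaneDown as exc:
        results["naive"] = f"FAILED: {exc}"
    standbys = {
        "us-east-1": "https://app.us-east-1.example.com",
        "eu-west-1": "https://app.eu-west-1.example.com",
    }
    results["preprovisioned"] = preprovisioned_failover(standbys, healthy={"eu-west-1"})
    return results


for path, outcome in drill().items():
    print(f"{path}: {outcome}")
```

The on-demand path fails as soon as provisioning is unavailable, while the pre-provisioned path still returns a usable endpoint because it never asks the control plane for anything.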

A number of major enterprises discovered that their DR scripts – which looked solid on paper – couldn’t run without a functioning control plane.

This isn’t an edge case; it’s an architectural pattern that needs to change.

What to do next

- Audit your architecture for hidden control plane dependencies

- Run failure simulations targeting regional control plane outages, not just availability zones

- Refine DR plans to minimise or eliminate control plane operations during failover

- Externalise monitoring to maintain visibility during provider-level incidents

- Practice regional failovers regularly under realistic conditions

Key takeaway

High availability isn’t something you configure once – it’s something you prove under real failure conditions.

If your HA strategy assumes US-EAST-1 or any single region will always be available, you don’t have resilience; you have an architectural dependency waiting to surface. The October 20 outage didn’t just take down thousands of services – it exposed the fragile backbone of cloud architectures built on assumed permanence.

How we can help

Incidents like this test both cloud providers and the real-world resilience of the systems built on top of them.

At Just After Midnight, we routinely examine the resiliency pillar of our customers’ architectures, analysing how they perform under similar failure scenarios so that when they’re faced with a large-scale outage like US-EAST-1, their applications stay up and tick along smoothly.

If your business-critical apps are keeping you up at night, maybe it’s time for a change. Schedule a free 15-minute cloud resilience check-up with our team. No strings attached. Just a calmer night’s sleep.

Simply get in touch here to book.